Introduction

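A crawler that bulk-downloads pictures from a photo-gallery site. I threw it together when I was bored, and the code is very simple, so I am just sharing it as is.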

Site: http://www.tdz4.com/

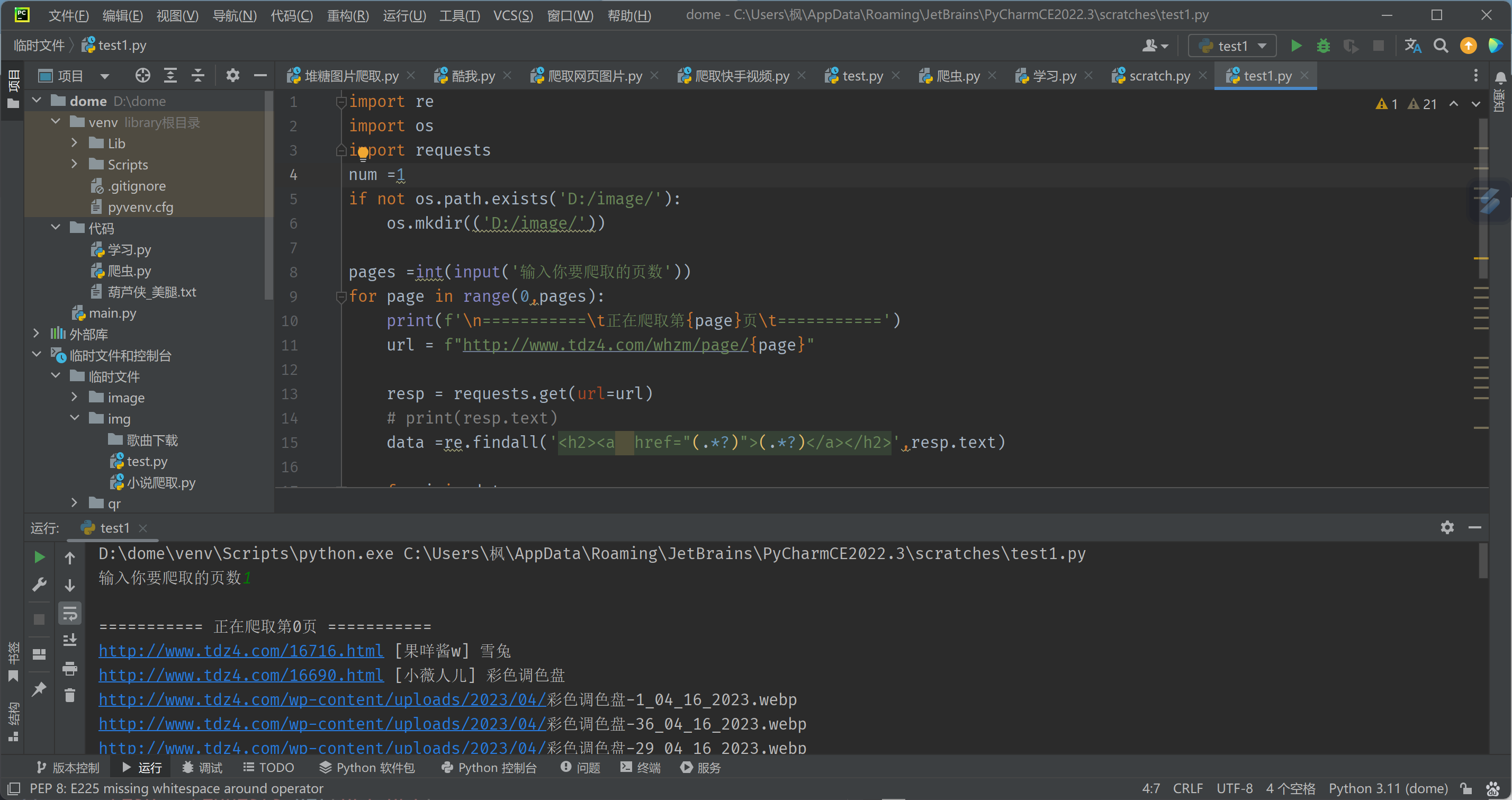

Result

Code

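import os
import re

import requests

SAVE_DIR = 'D:/image/'
# Create the output folder if it does not exist yet
os.makedirs(SAVE_DIR, exist_ok=True)

num = 0  # number of images saved so far
pages = int(input('Number of pages to crawl: '))

# List pages are assumed to be numbered from 1, so start at 1 rather than 0
for page in range(1, pages + 1):
    print(f'\n===========\tCrawling page {page}\t===========')
    url = f"http://www.tdz4.com/whzm/page/{page}"
    resp = requests.get(url=url, timeout=10)
    # print(resp.text)
    # Each match is an (album URL, album title) pair
    albums = re.findall('<h2><a href="(.*?)">(.*?)</a></h2>', resp.text)
    for album_url, name in albums:
        print(album_url, name)
        # Replace characters that Windows does not allow in file names
        safe_name = re.sub(r'[\\/:*?"<>|]', '_', name)
        album = requests.get(url=album_url, timeout=10)
        # Collect the full-size image URLs on the album page
        img_urls = re.findall('data-src="(.*?)"> <img', album.text)
        for img_url in img_urls:
            print(img_url)
            img = requests.get(url=img_url, timeout=10).content
            num += 1
            with open(f'{SAVE_DIR}{safe_name}{num}.jpg', "wb") as f:
                f.write(img)

print('\n\n===========\tDone!!!\t===========')
print(f'\nDownloaded {num} images in total')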
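To see what the two regular expressions in the script capture, here is a minimal sketch that runs them against made-up HTML fragments. The `example.com` URLs, the sample markup, and the helper names `extract_albums` and `extract_image_urls` are assumptions for illustration only; the real pages may be structured differently.

```python
import re

# Made-up HTML fragments for illustration; not copied from the real site.
LIST_PAGE = '''
<h2><a href="http://example.com/album/1">First album</a></h2>
<h2><a href="http://example.com/album/2">Second album</a></h2>
'''
ALBUM_PAGE = '''
<a data-src="http://example.com/img/a.jpg"> <img src="thumb-a.jpg"></a>
<a data-src="http://example.com/img/b.jpg"> <img src="thumb-b.jpg"></a>
'''


def extract_albums(html):
    """Return (album URL, album title) pairs found on a list page."""
    return re.findall('<h2><a href="(.*?)">(.*?)</a></h2>', html)


def extract_image_urls(html):
    """Return the data-src URLs found on an album page."""
    return re.findall('data-src="(.*?)"> <img', html)


albums = extract_albums(LIST_PAGE)
images = extract_image_urls(ALBUM_PAGE)
print(albums)
print(images)
```

Because each pattern uses the lazy `(.*?)` group, a match stops at the first closing quote, which is why every link comes back as its own entry instead of one long string.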

Scraping the image URLs

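This one is almost the same. The code above downloads the images themselves, while the code below only collects the image URLs and saves them to a text file!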

import re

import requests

num = 0  # number of URLs saved so far
pages = int(input('Number of pages to crawl: '))

# Output file, opened once in append mode
with open('写真.txt', mode='a', encoding='utf-8') as f:
    # List pages are assumed to be numbered from 1, so start at 1 rather than 0
    for page in range(1, pages + 1):
        print(f'\n===========\tCrawling page {page}\t===========')
        url = f"http://www.tdz4.com/whzm/page/{page}"
        resp = requests.get(url=url, timeout=10)
        # print(resp.text)
        # Each match is an (album URL, album title) pair
        albums = re.findall('<h2><a href="(.*?)">(.*?)</a></h2>', resp.text)
        for album_url, name in albums:
            print(album_url, name)
            album = requests.get(url=album_url, timeout=10)
            img_urls = re.findall('data-src="(.*?)"> <img', album.text)
            for img_url in img_urls:
                print(img_url)
                # Only the URL is recorded; the image itself is not downloaded
                f.write(img_url)
                f.write('\n')
                num += 1

print('\n\n===========\tDone!!!\t===========')
print(f'\nCollected {num} image URLs in total')
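Since the script appends to `写真.txt`, running it more than once will likely leave repeated URLs in the file. Below is a minimal sketch of reading the file back while skipping blank lines and duplicates; `load_urls` is a hypothetical helper name, not part of the original script.

```python
from pathlib import Path


def load_urls(path):
    """Read one URL per line, skipping blank lines and repeated entries."""
    seen = set()
    urls = []
    for line in Path(path).read_text(encoding='utf-8').splitlines():
        url = line.strip()
        if url and url not in seen:
            seen.add(url)
            urls.append(url)  # keep the order of first appearance
    return urls


if __name__ == '__main__':
    # Only runs if the crawler has already produced its output file
    if Path('写真.txt').exists():
        print(f"{len(load_urls('写真.txt'))} unique URLs")
```

The resulting list can then be handed to whatever download step you prefer, for example the image loop from the first script.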
