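Introduction

After about two days of learning Python I got this crawler working. I'm still a beginner myself,

so this post is mainly a record of what I learned (experts can skip it).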

Let's start with a simple example:

import requests  # import the library
url = 'http://baidu.com'  # page to fetch
res = requests.get(url)  # send a GET request
print(res.text)  # print the response body
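The simple example above waits forever if the server never answers and prints whatever comes back, even an error page. Here is a minimal sketch of a slightly more defensive version. The helper name `fetch_text` and its `session` parameter are my own assumptions for illustration; the `timeout` argument, `raise_for_status()` and `apparent_encoding` are standard parts of requests.

```python
def fetch_text(url, timeout=10, session=None):
    """Return the decoded body of `url`, raising an exception on HTTP errors.

    `fetch_text` and `session` are hypothetical names used for this sketch.
    """
    if session is None:
        # requests is a third-party package: pip install requests
        import requests
        session = requests.Session()
    res = session.get(url, timeout=timeout)   # give up after `timeout` seconds
    res.raise_for_status()                    # 4xx / 5xx responses become exceptions
    res.encoding = res.apparent_encoding      # guess the encoding from the body
    return res.text
```

Calling `print(fetch_text('http://baidu.com')[:200])` would then print the first 200 characters of the page, or raise an exception if the request fails.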

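The crawler further down also uses:

- reading values out of JSON
- replacing content with re regular expressions
- saving files with open

How to install modules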

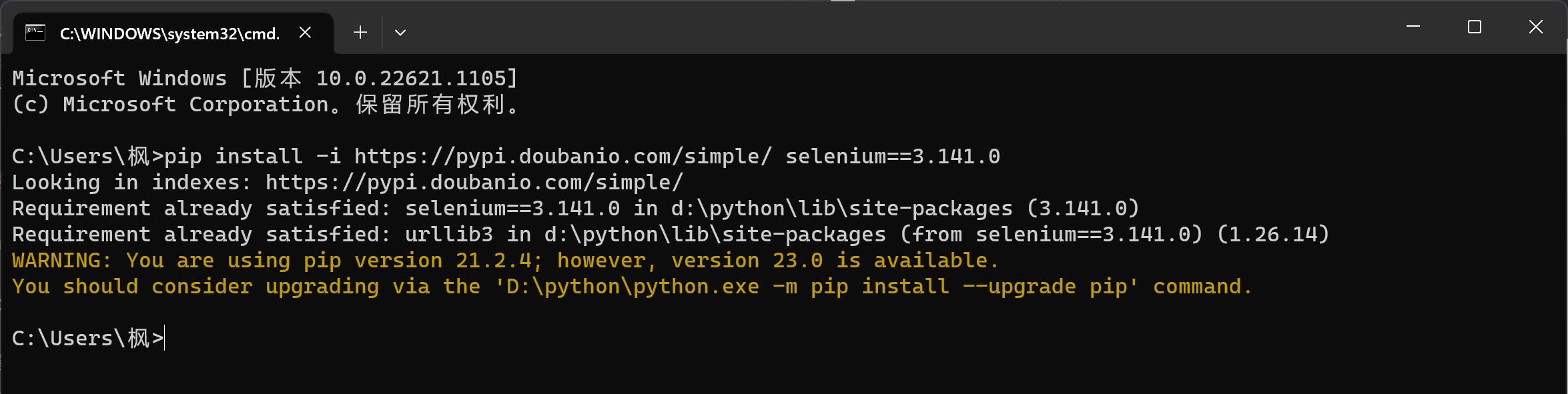

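To install a third-party Python module:

Press Win + R, type cmd, click OK, then enter the install command

pip install <module name> (for example, pip install requests) and press Enter

You may see a wall of red error text (Read timed out)

Fix: this is a network connection timeout, so switch pip to a mirror source (SSL/HTTPS)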

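- Tsinghua University: https://pypi.tuna.tsinghua.edu.cn/simple
- Alibaba Cloud (Aliyun): https://mirrors.aliyun.com/pypi/simple/
- University of Science and Technology of China: https://pypi.mirrors.ustc.edu.cn/simple/
- Huazhong University of Science and Technology: https://pypi.hustunique.com/
- Shandong University of Technology: https://pypi.sdutlinux.org/
- Douban: https://pypi.douban.com/simple/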

For example: pip install -i https://pypi.doubanio.com/simple/ <module name>

pip install -i https://pypi.doubanio.com/simple/ selenium==3.141.0
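If you would rather run the install from a Python script, the same command can be assembled as an argument list. This is only a sketch: the helper name `pip_install_command` and the `MIRRORS` table are made up for illustration, while `-i` (index URL) and `--timeout` (socket timeout in seconds) are regular pip options.

```python
import sys

# Two of the mirrors listed above; the short keys are hypothetical labels
MIRRORS = {
    "tsinghua": "https://pypi.tuna.tsinghua.edu.cn/simple",
    "aliyun": "https://mirrors.aliyun.com/pypi/simple/",
}


def pip_install_command(package, mirror="tsinghua", timeout=60):
    """Build (but do not run) a pip install command that uses a mirror."""
    return [
        sys.executable, "-m", "pip", "install",
        "-i", MIRRORS[mirror],        # download from the chosen mirror
        "--timeout", str(timeout),    # wait longer before "Read timed out"
        package,
    ]


# To actually install, pass the list to subprocess:
#   import subprocess
#   subprocess.run(pip_install_command("requests"), check=True)
```

Running pip through `sys.executable -m pip` makes sure the package goes into the same Python that runs the script.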

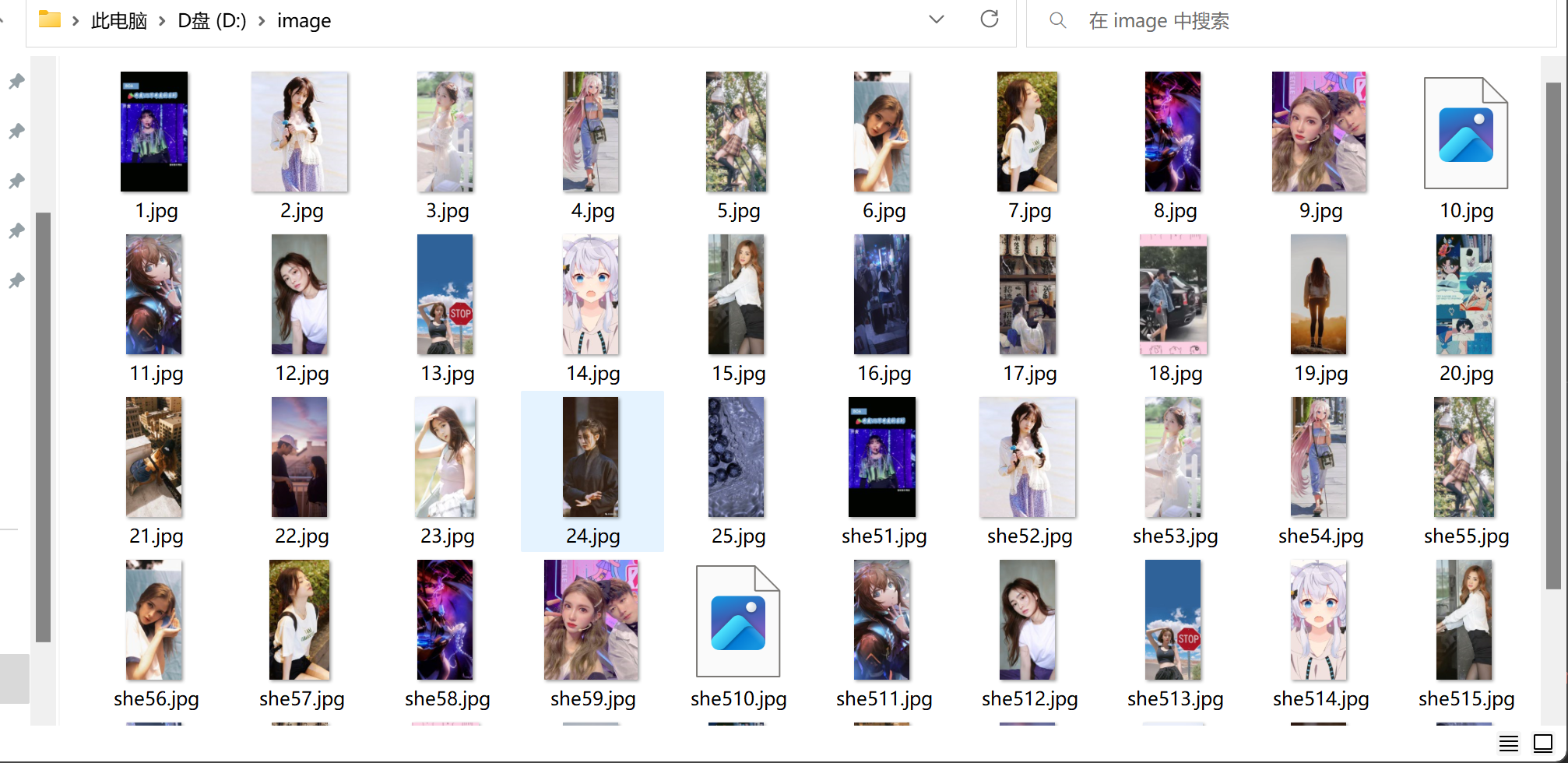

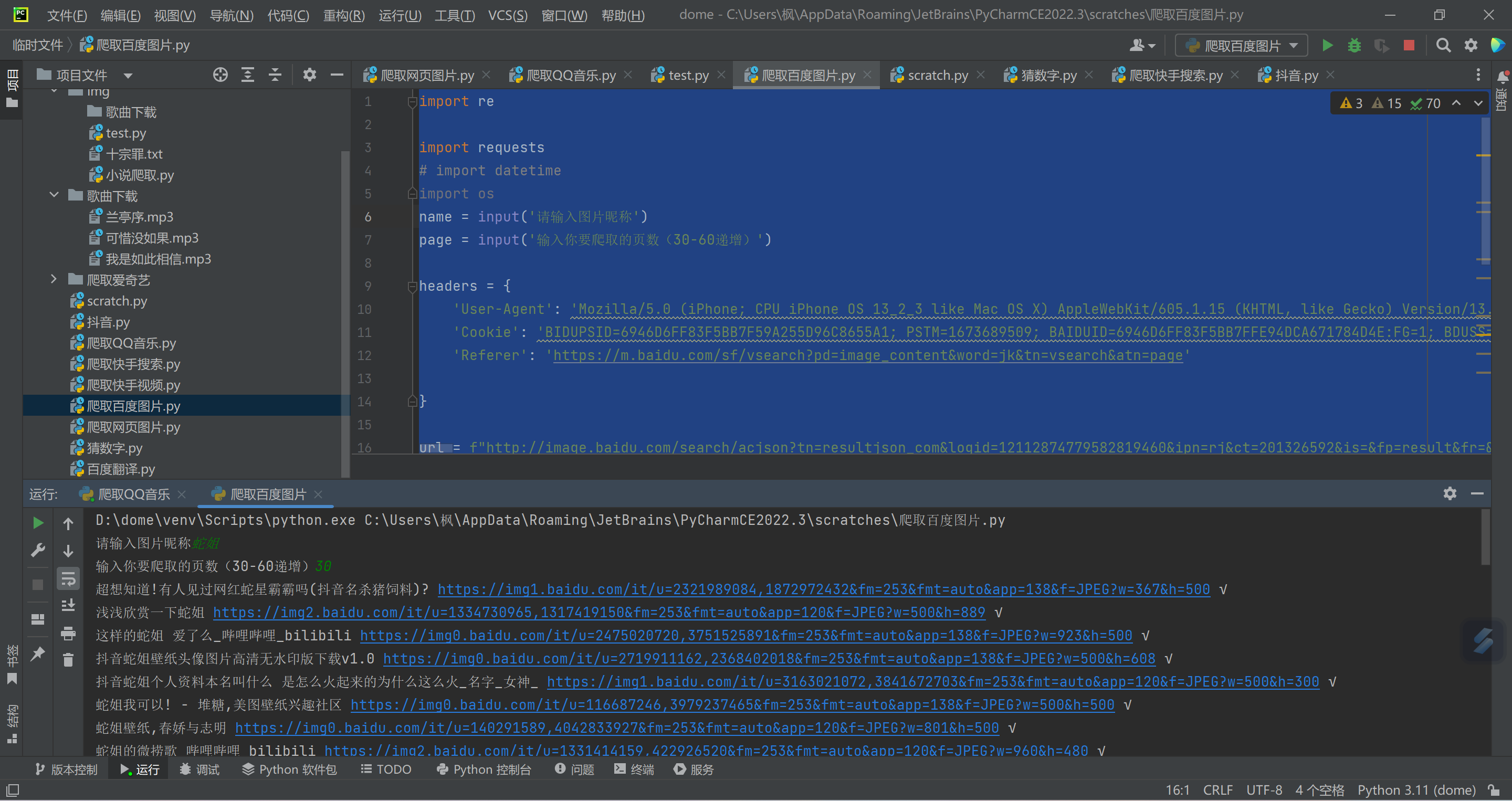

Below is the code for scraping Baidu Images

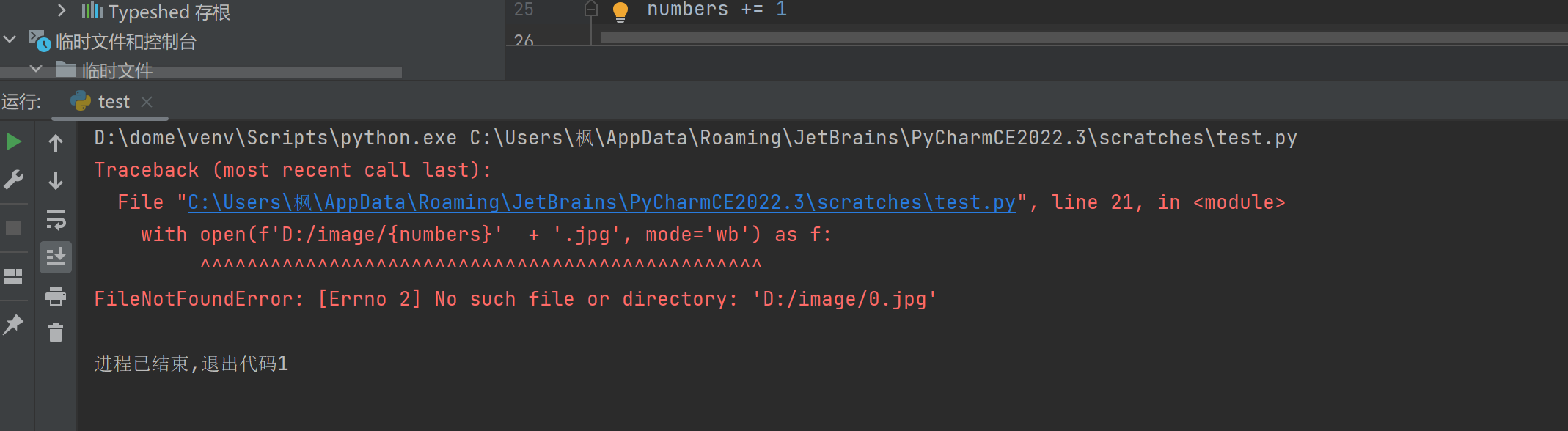

PS: the images are saved to an image folder on the D: drive; if that folder is missing, saving fails with an error
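A script can also create the folder itself instead of relying on it being there. A small sketch, assuming a hypothetical helper called `ensure_dir`; `os.makedirs` with `exist_ok=True` is standard library and does nothing if the folder already exists.

```python
import os


def ensure_dir(path):
    """Create `path` (and any missing parent folders) if it does not exist yet."""
    os.makedirs(path, exist_ok=True)   # no error when the folder is already there
    return path
```

For the folder used in this post the call would be `ensure_dir('D:/image/')`.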

The code is as follows:

import os
import re  # regular expressions
import requests

name = input('Enter the image keyword to search for: ')

# Request headers that imitate a mobile browser
headers = {
    'User-Agent': 'Mozilla/5.0 (iPhone; CPU iPhone OS 13_2_3 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.0.3 Mobile/15E148 Safari/604.1 Edg/109.0.0.0',
    # Cookie string copied from your own browser's developer tools; keep it private
    'Cookie': '<your Baidu cookie>',
    'Referer': 'https://m.baidu.com/sf/vsearch?pd=image_content&word=jk&tn=vsearch&atn=page',
}

if not os.path.exists('D:/image/'):
    os.mkdir('D:/image/')

for page in range(1, 10):
    # Search request URL; pn advances by 30 per page to match rn=30
    url = f"https://image.baidu.com/search/acjson?tn=resultjson_com&logid=12112874779582819460&ipn=rj&ct=201326592&is=&fp=result&fr=&word={name}&queryWord={name}&cl=2&lm=-1&ie=utf-8&oe=utf-8&adpicid=&st=&z=&ic=&hd=&latest=&copyright=&s=&se=&tab=&width=&height=&face=&istype=&qc=&nc=1&expermode=&nojc=&isAsync=&pn={page * 30}&rn=30&gsm=1e&1677171694590="
    # Fetch one page of results with requests.get
    response = requests.get(url=url, headers=headers)
    # Read the result list out of the JSON
    p_url = response.json()['data']
    for item in p_url:
        # Get the image link; skip entries that do not have one
        img_url = item.get('hoverURL')
        if not img_url:
            continue
        # Get the image title
        img_title = item.get('fromPageTitle', '')
        # Use a regular expression to replace special characters that do not belong in a file name
        title = re.sub(r'[\\/:*?"<>|\r\n#@()《》【】—_\-. ✘]', ' ', img_title)
        print(title, img_url, '√')
        # Download the image with a GET request
        res = requests.get(url=img_url, headers=headers)
        # Set the save path and write in binary mode
        with open(f'D:/image/{title}.jpg', mode='wb') as f:
            # Save the image bytes
            f.write(res.content)
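The three steps the crawler leans on (reading values out of JSON, cleaning the title with re, and saving with open) can be tried offline before touching the network. The sketch below uses a made-up `SAMPLE` response that only imitates the `data` / `hoverURL` / `fromPageTitle` fields used above; the helper names and the shorter character list are my own assumptions, not Baidu's actual format or the exact pattern from the crawler.

```python
import json
import os
import re

# Hypothetical sample shaped like the fields the crawler reads
SAMPLE = (
    '{"data": ['
    '{"hoverURL": "https://example.com/a.jpg", "fromPageTitle": "cat/dog"},'
    '{"fromPageTitle": "entry without a link"},'
    '{}'
    ']}'
)

# Characters that Windows does not allow in file names, plus line breaks
ILLEGAL = re.compile(r'[\\/:*?"<>|\r\n]')


def safe_name(title, fallback="untitled"):
    """Replace illegal file-name characters with spaces."""
    cleaned = ILLEGAL.sub(" ", title).strip()
    return cleaned or fallback


def pick_images(payload):
    """Return (file name, image URL) pairs, skipping entries without a link."""
    pairs = []
    for item in payload.get("data", []):
        url = item.get("hoverURL")
        if not url:
            continue
        pairs.append((safe_name(item.get("fromPageTitle", "")), url))
    return pairs


def save_bytes(folder, name, content):
    """Write raw bytes to folder/name.jpg and return the full path."""
    path = os.path.join(folder, f"{name}.jpg")
    with open(path, "wb") as f:   # "wb" = write binary, required for images
        f.write(content)
    return path


images = pick_images(json.loads(SAMPLE))
```

Using `.get()` instead of indexing means a missing key gives `None` rather than a `KeyError`, so one incomplete entry does not stop the whole run.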
