Introduction

I tinkered with this for a while and wrote it by following a video tutorial. I'm a complete beginner.

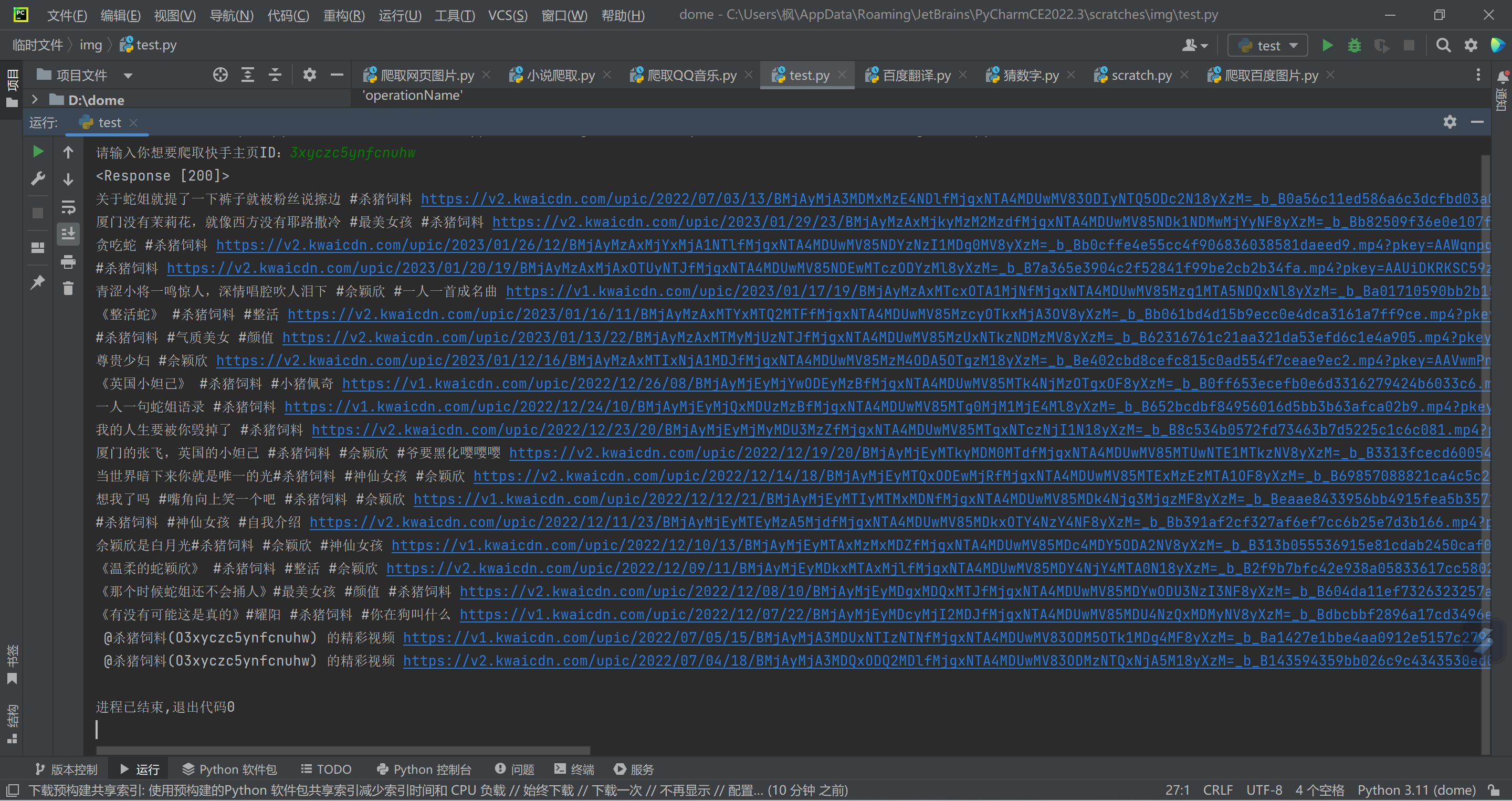

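This script downloads the videos from a Kuaishou user's profile page. There is nothing technically sophisticated about it; I'm sharing it here as a record of what I learned.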

Usage

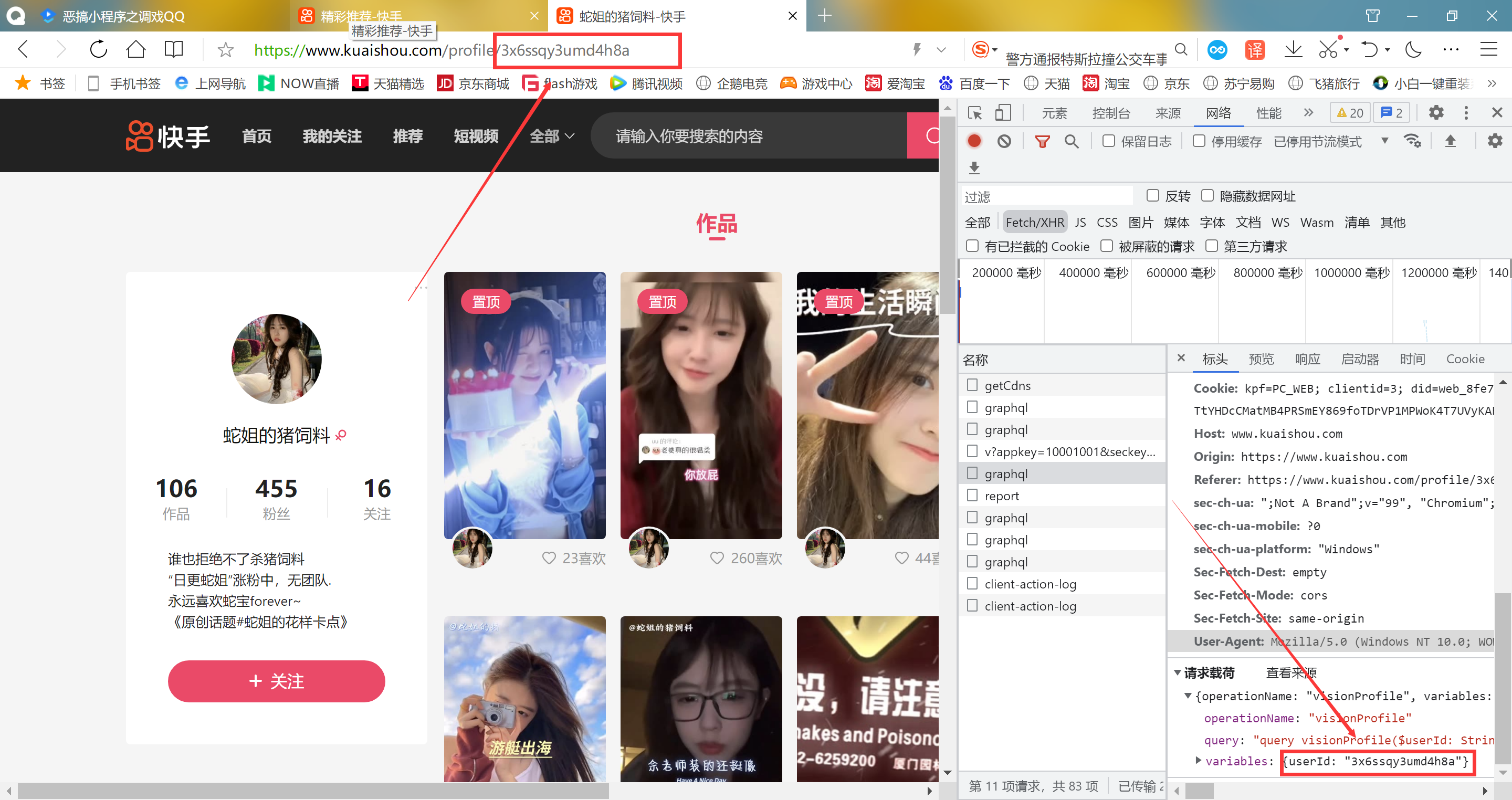

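The Kuaishou ID is shown directly on the user's profile page, or you can look it up by pressing F12 to open the browser's developer tools. It is also the last part of the profile URL, e.g. https://www.kuaishou.com/profile/3x6ssqy3umd4h8a.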
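If you would rather paste the whole profile URL than copy the ID by hand, a small helper can pull the ID out. This is a minimal sketch assuming profile URLs have the form https://www.kuaishou.com/profile/<id>, the form used in the script's Referer header; `extract_user_id` is a hypothetical name, not part of the original script.

```python
from urllib.parse import urlparse


def extract_user_id(text: str) -> str:
    """Return the user ID from a profile URL, or the input itself if it is already a bare ID.

    Assumption: profile URLs look like https://www.kuaishou.com/profile/<id>.
    """
    text = text.strip()
    # Split the URL path into non-empty segments, ignoring any query string
    parts = [p for p in urlparse(text).path.split('/') if p]
    if len(parts) >= 2 and parts[-2] == 'profile':
        return parts[-1]
    # Not a profile URL: treat the input as the ID itself
    return text


print(extract_user_id('https://www.kuaishou.com/profile/3x6ssqy3umd4h8a'))
```

In the script, `id = input(...)` could then become `id = extract_user_id(input(...))`, so either form of input works.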

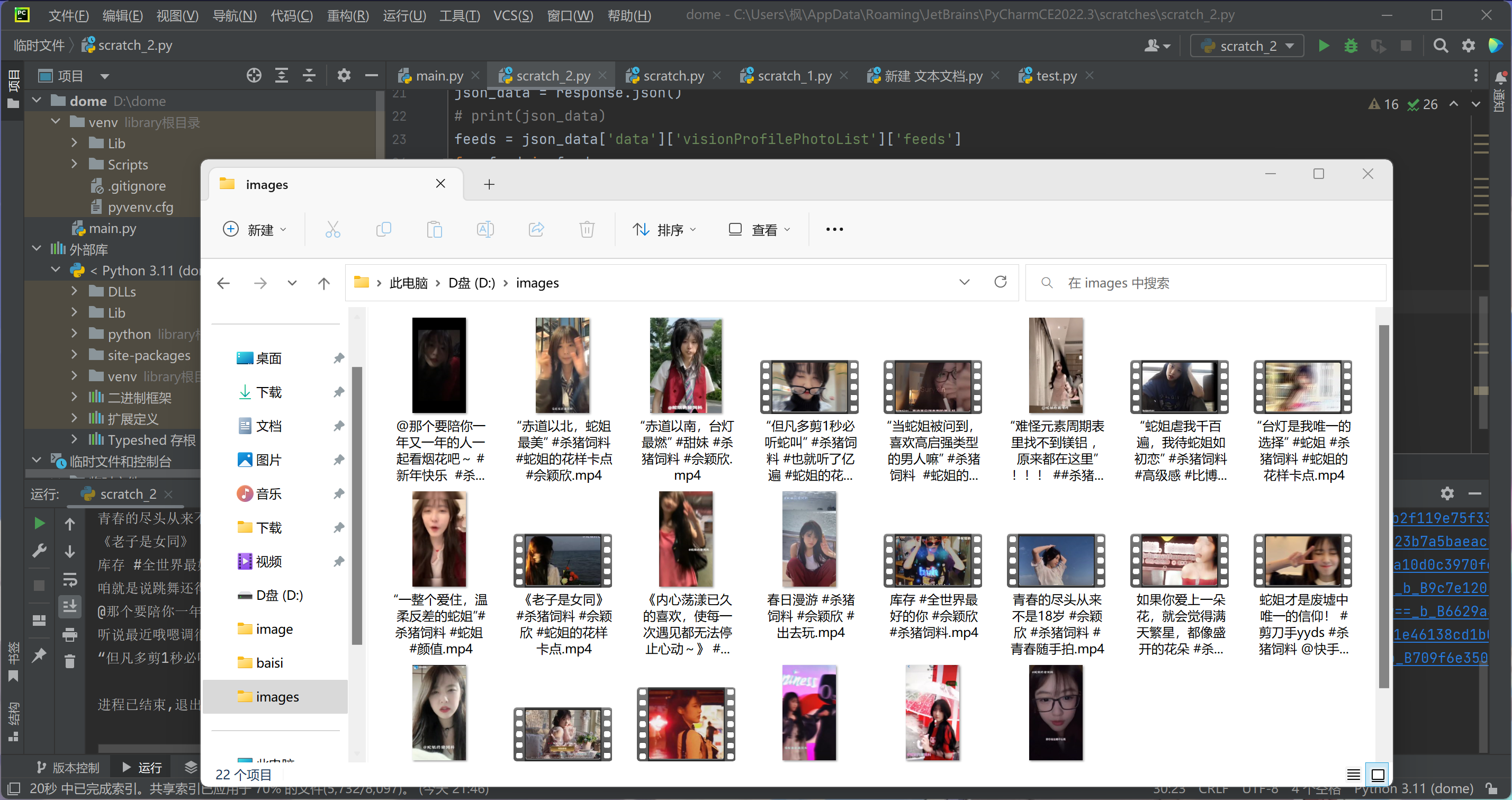

Here I downloaded the videos of 蛇姐 ("Sister Snake").

Result

Notes

import requests (requests is a third-party package, so install it first with pip install requests)

Concepts used

json (the request body)

headers (the request headers)

re (regular expressions)

input (reading user input)
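The json and re items above do most of the work in the script: the request body is a Python dict that `requests.post(..., json=payload)` serializes to JSON (and sends with a `Content-Type: application/json` header), and a regular expression strips characters that cannot appear in a file name. The sketch below shows both offline with the standard library only. `build_payload` and `clean_filename` are hypothetical helper names, and the query string is shortened to a placeholder; the real script sends the full GraphQL query.

```python
import json
import re


def build_payload(user_id: str, pcursor: str, query: str) -> dict:
    """GraphQL request body in the shape the script sends."""
    return {
        'operationName': 'visionProfilePhotoList',
        'query': query,  # the full GraphQL query text in the real script
        'variables': {'userId': user_id, 'pcursor': pcursor, 'page': 'profile'},
    }


# Characters Windows forbids in file names, plus the extra symbols the script removes
FORBIDDEN = re.compile(r'[\\/:*?"<>|\r\n#@()《》.]')


def clean_filename(caption, fallback: str) -> str:
    """Remove forbidden characters; use `fallback` if nothing is left."""
    name = FORBIDDEN.sub('', caption or '').strip()
    return name or fallback


# This JSON text is what requests would put in the POST body for json=payload
body = json.dumps(build_payload('3x6ssqy3umd4h8a', '', 'query {...}'), ensure_ascii=False)
print(body)
print(clean_filename('Hello: world?#fun\n', 'fallback'))
```

A raw string (`r'...'`) is used for the pattern so that Python does not try to interpret the backslashes itself before `re` sees them.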

Code

```python
import os
import re

import requests

# Kuaishou user ID, e.g. the last part of https://www.kuaishou.com/profile/3x6ssqy3umd4h8a
user_id = input('Enter the Kuaishou profile ID to download from: ')
# page = int(input('Enter the number of pages to download: '))

SAVE_DIR = 'D:/video/'
URL = 'https://www.kuaishou.com/graphql'

HEADERS = {
    # Paste the Cookie header from your own logged-in browser session (F12 -> Network).
    # Cookies expire and identify your account, so don't share or reuse someone else's.
    'Cookie': 'PASTE_YOUR_COOKIE_HERE',
    'Host': 'www.kuaishou.com',
    'Origin': 'https://www.kuaishou.com',
    'Referer': f'https://www.kuaishou.com/profile/{user_id}',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.71 Safari/537.36 Core/1.94.190.400 QQBrowser/11.5.5240.400',
}


def get_next(pcursor):
    """Download one page of videos and return the cursor for the next page."""
    # GraphQL request body, as sent by the Kuaishou web client
    payload = {
        'operationName': 'visionProfilePhotoList',
        'query': "fragment photoContent on PhotoEntity {\n id\n duration\n caption\n originCaption\n likeCount\n viewCount\n realLikeCount\n coverUrl\n photoUrl\n photoH265Url\n manifest\n manifestH265\n videoResource\n coverUrls {\n url\n __typename\n }\n timestamp\n expTag\n animatedCoverUrl\n distance\n videoRatio\n liked\n stereoType\n profileUserTopPhoto\n musicBlocked\n __typename\n}\n\nfragment feedContent on Feed {\n type\n author {\n id\n name\n headerUrl\n following\n headerUrls {\n url\n __typename\n }\n __typename\n }\n photo {\n ...photoContent\n __typename\n }\n canAddComment\n llsid\n status\n currentPcursor\n tags {\n type\n name\n __typename\n }\n __typename\n}\n\nquery visionProfilePhotoList($pcursor: String, $userId: String, $page: String, $webPageArea: String) {\n visionProfilePhotoList(pcursor: $pcursor, userId: $userId, page: $page, webPageArea: $webPageArea) {\n result\n llsid\n webPageArea\n feeds {\n ...feedContent\n __typename\n }\n hostName\n pcursor\n __typename\n }\n}\n",
        'variables': {'userId': user_id, 'pcursor': pcursor, 'page': 'profile'},
    }
    response = requests.post(URL, headers=HEADERS, json=payload, timeout=10)
    print(response)
    json_data = response.json()
    # print(json_data)
    photo_list = json_data['data']['visionProfilePhotoList']
    feeds = photo_list['feeds']

    for feed in feeds:
        photo_url = feed['photo']['photoUrl']
        caption = feed['photo']['originCaption'] or ''
        # Remove characters that are illegal in Windows file names, plus a few noisy symbols
        caption = re.sub(r'[\\/:*?"<>|\r\n#@()《》.]', '', caption)
        # Keep names short; fall back to the video's id if the caption is now empty
        name = caption[:100] or feed['photo']['id']
        print(name, photo_url)
        # Download first, then write, so a failed request doesn't leave an empty file
        video = requests.get(photo_url, timeout=30).content
        with open(os.path.join(SAVE_DIR, f'{name}.mp4'), mode='wb') as f:
            f.write(video)

    print('\n\n')
    print('Download finished!!!')
    print('Downloaded', len(feeds), 'videos')
    return photo_list['pcursor']


os.makedirs(SAVE_DIR, exist_ok=True)
pcursor = ''
# Keep requesting pages until the server reports there are no more
while pcursor != 'no_more':
    pcursor = get_next(pcursor)
```
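The script pages through a profile by sending the `pcursor` returned with each page in the next request, and it stops once the server returns `'no_more'`. That cursor-following logic can be separated from the downloading as a generator, which makes it easy to test without touching the network. In this sketch `iter_feeds` is a hypothetical helper and `fetch_page` is a stand-in: in the real script it would wrap the `requests.post` call and return `json_data['data']['visionProfilePhotoList']`. The fake pages and cursor values are made up for illustration.

```python
from typing import Callable, Iterator


def iter_feeds(fetch_page: Callable[[str], dict]) -> Iterator[dict]:
    """Yield every feed across all pages, following pcursor until it is 'no_more'."""
    pcursor = ''  # an empty cursor requests the first page
    while pcursor != 'no_more':
        page = fetch_page(pcursor)
        yield from page['feeds']
        pcursor = page['pcursor']


# Made-up responses keyed by the cursor that was sent
FAKE_PAGES = {
    '': {'feeds': [{'id': 'a'}, {'id': 'b'}], 'pcursor': 'cursor-1'},
    'cursor-1': {'feeds': [{'id': 'c'}], 'pcursor': 'no_more'},
}

ids = [feed['id'] for feed in iter_feeds(FAKE_PAGES.__getitem__)]
print(ids)  # ['a', 'b', 'c']
```

A loop like this avoids the recursion limit that a function calling itself once per page could hit on a very large profile.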
